What will affect search results in the future? This article builds on the Law of Accelerating Returns proposed by Raymond Kurzweil, which holds that humankind advances faster and faster because each advance creates new opportunities for growth. To understand this theory, let’s go “Back to the Future”.

Marty McFly traveled from 1985 to 1955 and was taken aback by the prices of water and by how little love people had for electric guitars. If the plot were moved to 2015 and McFly went back to 1985 (the same 30 years), he would find himself in a world untouched by the Internet, smartphones, drones and many other things people invented over that period. If Kurzweil is right, we will progress more in 2015–2030 than in 1750–2015, meaning that humankind will achieve more in 15 years than in the preceding 265 years. Such rapid progress will affect every industry and sector, including SEO. That is why I think there is no better time to ponder what comes next: change might come sooner than we expect. I have singled out five trends that, in my opinion, will affect search results in the future.

#1 Artificial Intelligence

Every day brings new information about artificial intelligence. It is expected to replace people in many industries, and it is already present in our lives: AI handpicks music for us on Spotify and movies on Netflix. In search, it takes the form of the RankBrain algorithm. It is not yet entirely clear what precisely RankBrain does, but we can reasonably predict what it will be doing soon.

AI can be classified in many ways, for example as follows:

- a) Controlled (supervised) AI, which draws conclusions and acts based on analyses of labeled historical data

- b) Uncontrolled (unsupervised) AI, which makes its own decisions without relying on labeled historical examples.

RankBrain is a type of controlled AI: it can process large amounts of historical data, including numerous examples of good and bad SEO practices, and it just needs to extrapolate from them. Introduce uncontrolled AI into search results, however, and you may turn them completely upside down. Currently, about 600 changes are made to the algorithm every year. Real-time uncontrolled AI could generate new ranking factors, and those 600 changes could happen in an hour rather than a year.

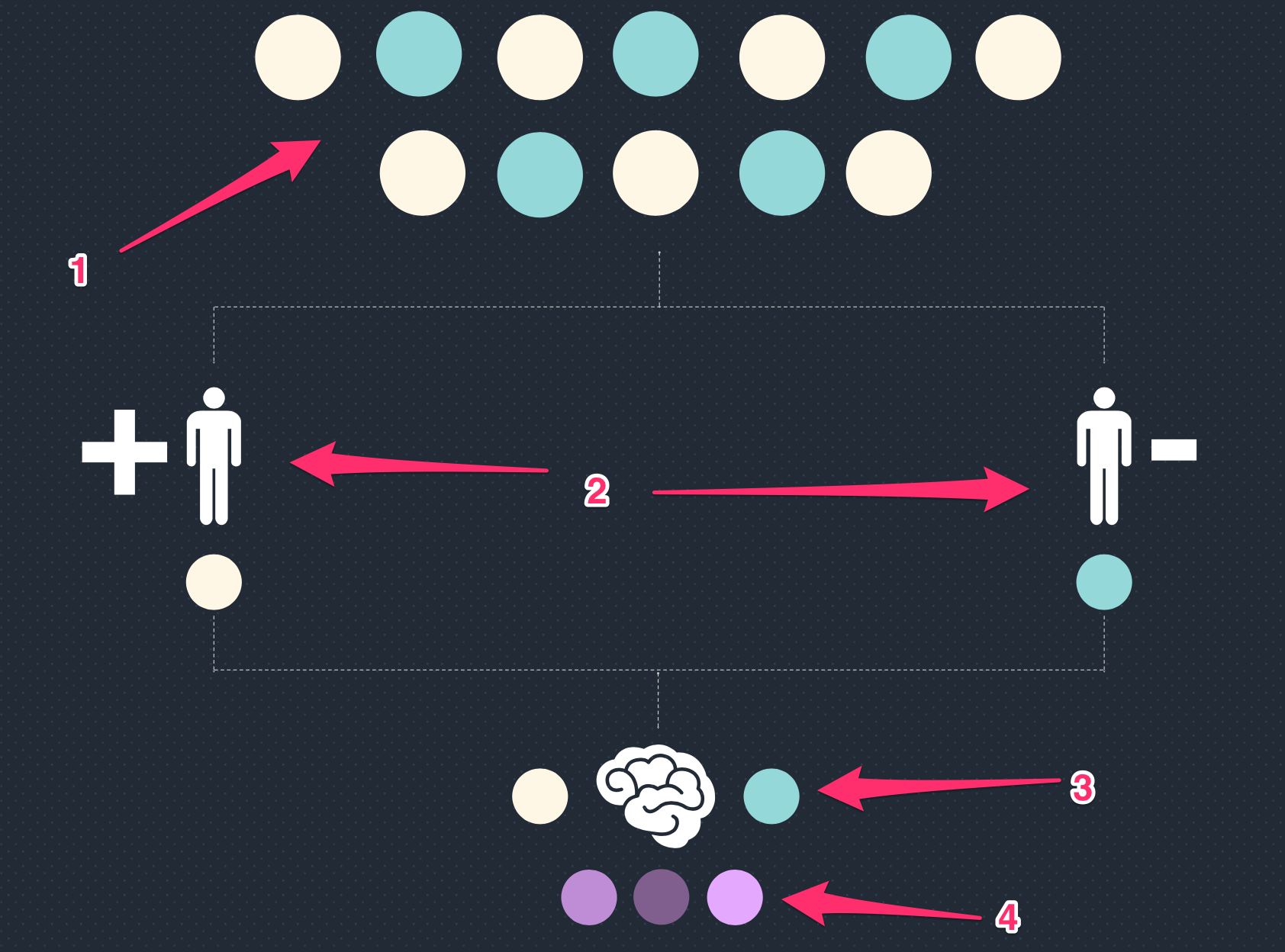

Let’s take a closer look at how AI can work within search results.

- Start with a data set, say one containing two types of links: good and bad.

- Google’s Search Quality Team and the Disavow Tool have gathered information on good and bad links for many years, so AI now has vast knowledge of what good and bad links look like.

- It is easy for AI to analyze new links based on this historical data and decide which are good and which are bad; this means it can analyze a domain’s entire link profile on its own and decide whether to penalize it. For now, Google uses the non-AI Penguin algorithm for that purpose, but sooner or later it will be AI-enhanced.

- Given a set of good and bad links, AI can also discover entirely new criteria for whether a link is good or bad. Google engineers therefore won’t have to tell such algorithms what makes a link good or bad; AI will work it out on its own.
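To make the idea of controlled (supervised) AI more concrete, here is a minimal sketch of a link classifier trained on labeled examples. It is not Google’s method: the three link features, the labeled data and the choice of logistic regression are all hypothetical assumptions made for illustration. The learned weights play the role of the “criteria” described above, because the model works out from the labels which features separate good links from bad ones.

```python
import math

# Hypothetical link features, each scaled to 0..1 (assumptions, not Google's signals):
# [exact_match_anchor, from_link_network, topical_relevance]
LABELED_LINKS = [
    ([1.0, 1.0, 0.1], 0),  # label 0 = "bad" link
    ([1.0, 0.9, 0.2], 0),
    ([0.9, 1.0, 0.0], 0),
    ([0.0, 0.0, 0.9], 1),  # label 1 = "good" link
    ([0.1, 0.0, 0.8], 1),
    ([0.2, 0.1, 1.0], 1),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, lr=0.5, epochs=2000):
    """Fit logistic-regression weights with plain batch gradient descent."""
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in examples:
            prediction = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            error = prediction - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        # Average the gradients over the data set and take one step downhill.
        weights = [w - lr * g / len(examples) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(examples)
    return weights, bias


def p_good(link, weights, bias):
    """Estimated probability that a link is 'good'."""
    return sigmoid(sum(w * x for w, x in zip(weights, link)) + bias)


weights, bias = train(LABELED_LINKS)
print("learned weights:", [round(w, 2) for w in weights])
print(round(p_good([1.0, 1.0, 0.0], weights, bias), 3))  # resembles a link-network link
print(round(p_good([0.0, 0.0, 1.0], weights, bias), 3))  # resembles an editorial link
```

Nobody hard-codes a rule such as “relevant links are good”; printing the learned weights shows which features the model ended up relying on. A real system would use far more data and features than this toy example.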

We’re not entirely sure how Google’s AI works, but we can infer what it is capable of from what other companies have already achieved. Using IBM Watson, let me show you a few things that are already possible and that Google could, in theory, employ.

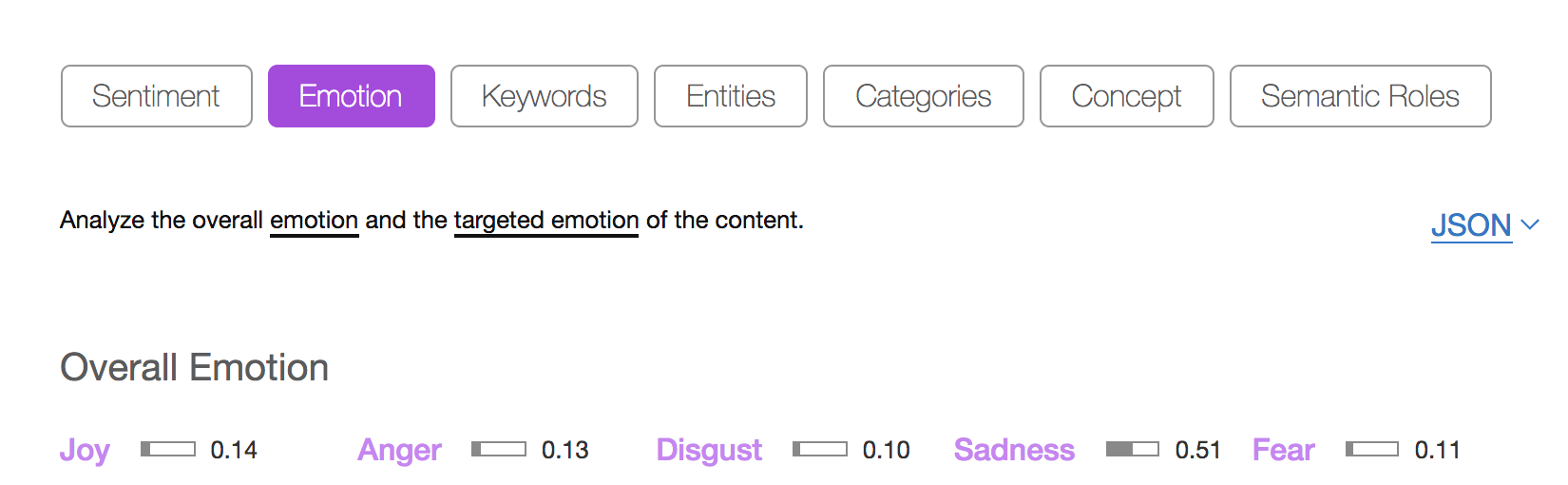

Recognizing emotions

Just paste a piece of text, and IBM Watson will identify the emotions it conveys.

Google could use information about your current mood to tailor search results. If it thought you were sad, it might avoid displaying content that triggers sadness.

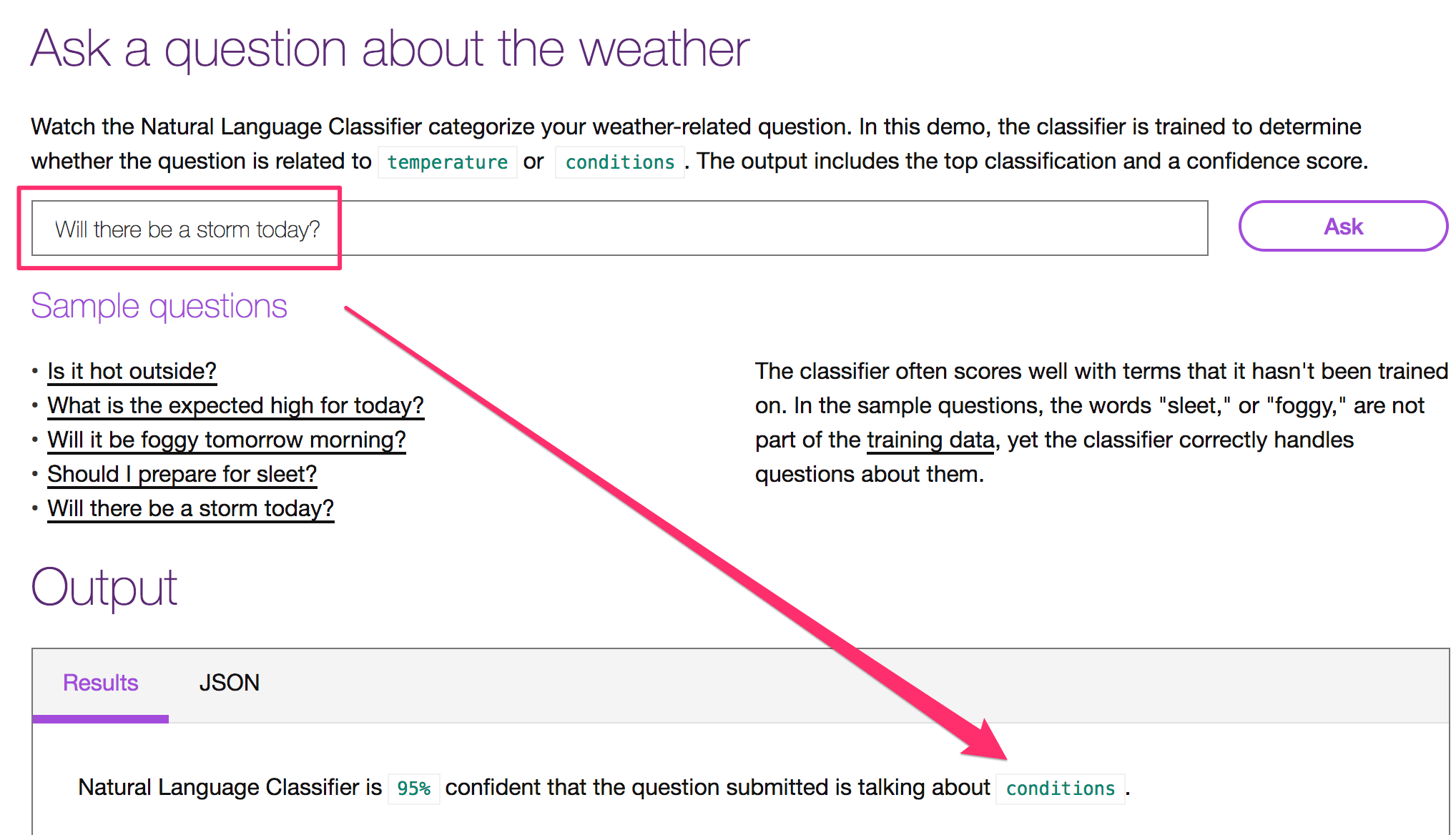

Recognizing intentions


From a query in natural language, AI can recognize your intention.

The query in the demo is about a storm, and Watson responds that it concerns weather conditions rather than temperature. In the future, communicating with the search engine in natural language rather than in keywords will be essential. Google has been working on semantics for a long time, so its AI is very likely already proficient at this.

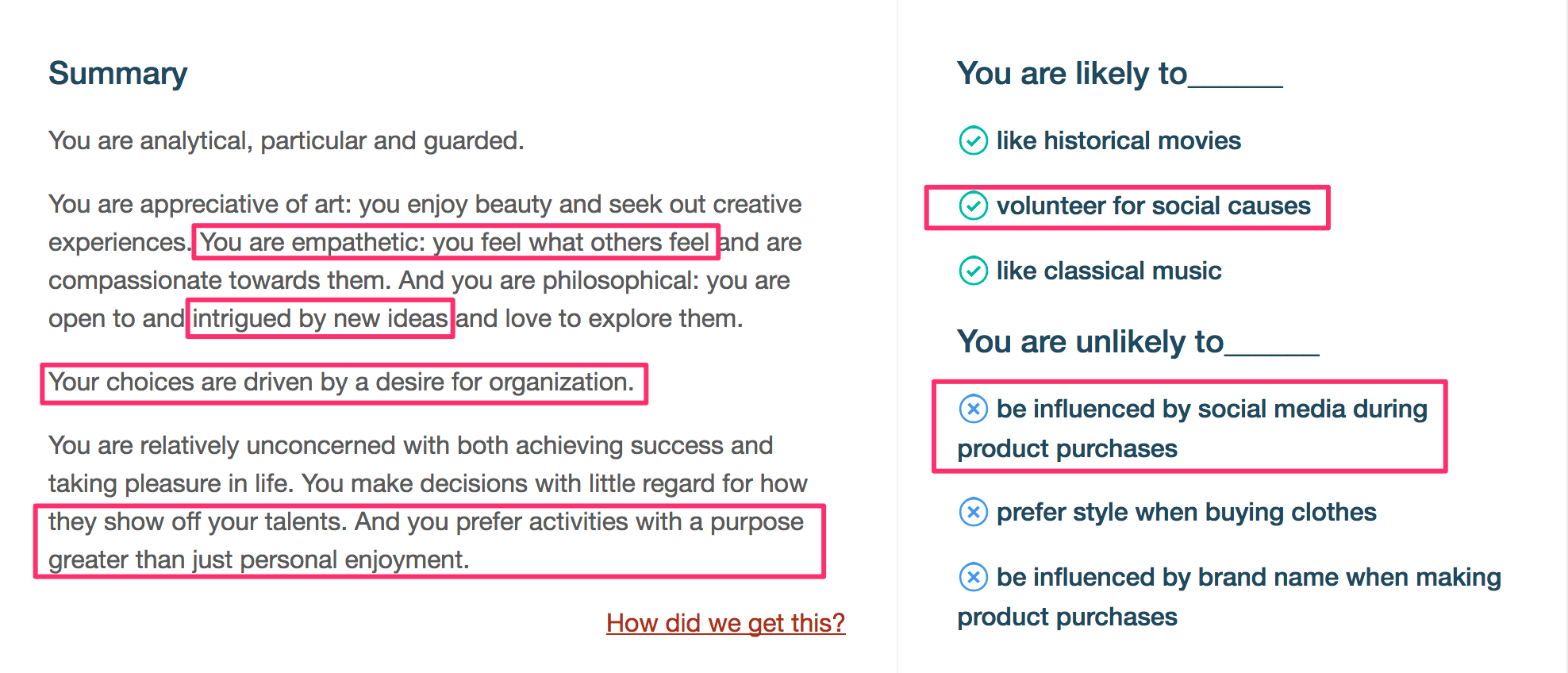

Recognizing personality


From a text of about 100 words, Watson can pinpoint the personality of its author. I tested the feature by pasting Martin Luther King’s “I Have a Dream” speech.

In my view, the AI recognized Dr. King’s personality remarkably well, arriving at the following conclusions, among others:

- You are empathetic

- You are intrigued by new ideas

- Your choices are driven by a desire for organization

- You are unlikely to be influenced.

All of this leads to a single conclusion: personalization will be taken to a new level. Google has been personalizing search results since 2009, but here I mean a deeper kind of personalization, tailored to each individual user.

Truth be told, everyone could have their own personal Google, with search results suited to their personality or current mood.

Multidimensionality of the algorithm

SEO experts find it increasingly difficult to analyze why websites move up or down in search results. When Penguin was introduced in 2012, its effects were obvious: whether you ran a website about loans or about medicine, the same actions produced the same results. Approaching such analyses vertically is now pointless because the algorithm has become multidimensional. It includes a few hundred ranking factors, and AI can now choose a unique set of them for every query or category of queries. In one industry the title tag may matter most; in another, the H1 heading.

Consequently, if everyone in your industry uses link exchange systems, doing the same may not end in a penalty; but if you use such a system in an industry where no one else does, AI will be able to spot the deviation from the norm very quickly and penalize you.
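One simple way to picture this “deviation from the norm” is a per-industry z-score: compare a site’s value for some signal with the distribution of that signal among its industry peers. The sketch below is only an illustration under loudly stated assumptions: the signal (share of backlinks from link-exchange networks), the peer figures and the threshold of three standard deviations are all hypothetical, and nothing here describes how Google actually scores sites.

```python
import statistics

# Hypothetical signal: share of a site's backlinks that come from link-exchange
# networks (0..1). The peer figures below are invented for illustration.
industries = {
    "loans": [0.40, 0.45, 0.50, 0.38, 0.42],     # exchanges are common here
    "medicine": [0.02, 0.01, 0.03, 0.00, 0.02],  # almost nobody uses them
}

THRESHOLD = 3.0  # hypothetical cut-off, in standard deviations


def deviation_score(site_value, peer_values):
    """Signed number of standard deviations a site sits above (+) or below (-) its industry mean."""
    mean = statistics.mean(peer_values)
    stdev = statistics.pstdev(peer_values)
    if stdev == 0:
        # All peers identical: any higher value is an extreme deviation.
        return 0.0 if site_value <= mean else float("inf")
    return (site_value - mean) / stdev


def is_outlier(site_value, industry):
    """One-sided check: only unusually HIGH usage is flagged."""
    return deviation_score(site_value, industries[industry]) > THRESHOLD


print(is_outlier(0.45, "loans"))     # → False
print(is_outlier(0.45, "medicine"))  # → True
```

The same 45% share is unremarkable among loan sites but sits dozens of standard deviations above the norm for medical sites. The check is one-sided on purpose: using far fewer exchange links than your peers is not a red flag.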

At SMX in 2016, Google admitted that it was not completely sure how RankBrain worked. Paul Haahr said: “We don’t fully understand RankBrain.” That absolutely doesn’t mean they don’t know what they’re doing, though! For example, RankBrain selects its own set of ranking signals depending on the industry, which has produced the multidimensionality described above and made the vertical approach useless. We in the SEO industry can still predict how AI will behave in a given situation: tools such as Market Brew are already being developed that use AI to predict how Google’s AI will respond to specific changes to a website or its external environment.

#2 Voice search

Most people have probably heard of devices such as Amazon Echo, Google Home or HomePod (recently unveiled by Apple).

I believe we will reach a milestone in voice search once these devices come into common use. Voice search is not yet a mainstream topic, although it is no longer discussed only behind closed doors. In the US, 41% of adults and 54% of teenagers use this feature daily. In 2014, Google published an interesting report on the subject.

The basic difference in voice search is how you communicate with the search engine: you use natural language.

Since voice search is different, optimizing for it should be different too. Below are a few recommendations:

Answer questions

As you can see in the picture above, when speaking natural language you ask more questions than when searching by text. You should therefore optimize your content around questions.

Use structured data

Structured data give the search engine context. If a user asks about “tomato soup”, structured data tell the search engine whether a page belongs to a restaurant serving the soup or to a website with a recipe for it.

Useful links:

- Schema.org – a description of how to implement structured data.

- Data Highlighter in Search Console – a tool for implementing structured data without touching the source code.

- Structured Data Testing Tool – checks whether Google correctly reads the structured data you implemented.
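For the tomato soup example, here is a minimal sketch of schema.org markup in JSON-LD, a structured data format Google reads. The two pages and all their values (including the business name “Example Bistro”) are hypothetical; `Recipe`, `Restaurant` and the properties used are real schema.org vocabulary. Python is used only to assemble and print the markup; on a live page you would paste the resulting `<script>` element into the HTML.

```python
import json

# Two hypothetical pages that both match the query "tomato soup".
recipe_page = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Tomato soup",
    "recipeIngredient": ["1 kg tomatoes", "1 onion", "500 ml vegetable stock"],
    "recipeInstructions": [
        {"@type": "HowToStep", "text": "Fry the chopped onion."},
        {"@type": "HowToStep", "text": "Add the tomatoes and stock, simmer, then blend."},
    ],
}

restaurant_page = {
    "@context": "https://schema.org",
    "@type": "Restaurant",
    "name": "Example Bistro",  # hypothetical business
    "servesCuisine": "Polish",
    "address": {"@type": "PostalAddress", "addressLocality": "Warsaw"},
}


def json_ld_script(data):
    """Wrap a schema.org object in the <script> element that goes into the page's HTML."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


for page in (recipe_page, restaurant_page):
    print(json_ld_script(page))
```

Both pages may mention the same soup, but the `@type` alone tells the search engine that one is a recipe and the other a place to eat it.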

Create an FAQ

The growing share of question queries should make you consider creating a Frequently Asked Questions section.
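An FAQ section can also be described with structured data. The sketch below builds schema.org `FAQPage` markup, whose `mainEntity` is a list of `Question` items, each with an `acceptedAnswer`. The helper name `faq_page_json_ld` is hypothetical and the questions and answers are placeholder content; whether Google displays such markup as a rich result depends on its guidelines at the time.

```python
import json


def faq_page_json_ld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs.

    faq_page_json_ld is a hypothetical helper name, not a library function.
    """
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder questions and answers; replace them with your users' real questions.
faq = faq_page_json_ld([
    ("How long does tomato soup take to cook?", "About 30 minutes."),
    ("Can tomato soup be frozen?", "Yes, for up to three months."),
])
print(json.dumps(faq, indent=2))
```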

#3 SEO & UX

SEO as a standalone discipline will cease to exist in the future; it will need to be incorporated into a broader marketing plan. It has already been combined with other areas of marketing for quite some time.

It has been blended with Content Marketing, Public Relations, and Social Media. The newest combination is UX (User eXperience), which will surely be as important as those three.

SEO specialists have been talking about UX as a ranking factor for many years, and indeed factors such as page loading speed and mobile friendliness play an increasingly important role, but these are objective factors. Soon, Google will be able to introduce subjective factors too: the company is well equipped to analyze how user-friendly websites are.

It has Android, which accounts for 64% of the smartphone market, and Chrome, which accounts for 60% of the web browser market. It also has Google Analytics, used by around 60 million websites. Finally, it has AI that can draw on data gathered by these three tools and generate entirely new UX factors affecting SEO. That is why new measures are emerging, and more will be developed, such as:

- Depth of visits – the search engine could measure how many pages users view during a visit (check the Pages/Session metric in Google Analytics)

- % of returns to search results – Google knows how often users go back to search results after clicking a given result

- CTR in search results – if your result gets a higher CTR despite ranking lower, your position may improve. Google also uses AI here to predict click-through rate, and it already uses AI to estimate CTR for AdWords (see Rand Fishkin’s case study on the impact of CTR on search rankings)

- Time spent on a website

- Over-advertising (Google has been talking about it since 2016).
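To make these measures concrete, here is a minimal sketch that computes them from a handful of visits. Everything in it is an assumption for illustration: the `Visit` record, the sample visits and especially the `EXPECTED_CTR` table, whose numbers are made up rather than taken from Google or any study. The last step shows how a result could be judged by whether it gets more clicks than its position would predict.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Visit:
    """One hypothetical visit that started with a click in search results."""
    pages_viewed: int
    seconds_on_site: float
    returned_to_serp: bool  # the user went back to the results page


def ux_metrics(visits, impressions):
    """Depth of visit, return-to-results rate, time on site and CTR for one result."""
    clicks = len(visits)
    return {
        "pages_per_visit": mean(v.pages_viewed for v in visits),
        "return_to_serp_rate": sum(v.returned_to_serp for v in visits) / clicks,
        "avg_time_on_site_s": mean(v.seconds_on_site for v in visits),
        "ctr": clicks / impressions,
    }


# Hypothetical expected CTR by ranking position (illustrative numbers only).
EXPECTED_CTR = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def ctr_surplus(ctr, position):
    """Positive when a result is clicked more often than its position predicts."""
    return ctr - EXPECTED_CTR[position]


visits = [
    Visit(4, 180.0, False),
    Visit(1, 8.0, True),
    Visit(3, 95.0, False),
    Visit(2, 40.0, False),
]
m = ux_metrics(visits, impressions=40)
print(m)
print(round(ctr_surplus(m["ctr"], position=4), 2))  # → 0.03
```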

With these changes, we can speak of the “Two-Algorithm World” described by Rand Fishkin: we will have to optimize for two types of algorithms.

So far, on-site optimization has focused on input: the keywords a user types in. The new on-site optimization will focus on output: what happens after a user has clicked a search result. We will optimize the UX factors that drive the new measures listed above. SEO audits should therefore already be combined with UX audits.

Using artificial intelligence, Google could also develop completely new UX factors that influence SEO. If AI decides that illnesses on medicine-related websites are best described in a table, it can make such an arrangement of content a ranking factor for similar websites. Naturally, this is still in the future, though perhaps not as distant as we think.

#4 Binary information

If your website provides binary information, meaning information that can be given as a direct answer, you may soon have trouble generating traffic to it. You need to understand that Google does not benefit from referring users to your website.

As early as 2014, Eric Schmidt stressed that we shouldn’t expect the search engine to generate traffic, because whenever possible it will answer a user’s query directly in search results. In other cases, it is responsible for where it refers the user. If it sends the user to a website with poor UX, the user judges the search engine too, to some extent, and let’s not forget that Google doesn’t operate in a vacuum: having secured an absolute monopoly in Poland doesn’t mean it can rest on its laurels. For a long time, the company has been extending its knowledge graph, which is gradually changing search results.

The user instantly gets an answer to his or her question and doesn’t need to leave the search engine. This is particularly important for voice search, because voice interfaces rule out browsing websites. Today, over 50% of search results include these or similar knowledge panels.

Knowledge panels can be an opportunity as well, however. Take Direct Answers: in short, a Direct Answer is a response to a specific question, given in search results and taken from an external website.

#5 Semantic SEO

In August 2013, Google rolled out the Hummingbird algorithm. It was not an update to Panda or Penguin but a complete overhaul, with those two algorithms becoming parts of it. It was Google’s big step towards semantics: recognizing the intent and context of a query. Google wants to know whether by typing in “panda” you meant the animal or the antivirus software. The algorithm change should also translate into a change in how content is created.

[Figure: context, intentions, keywords]

Now you should focus on the intentions and context of a query rather than on keywords.
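The “panda” example can be illustrated with a deliberately simple context-overlap heuristic in the spirit of the Lesk algorithm: score each meaning of an ambiguous word by how many of its typical context words appear in the query. This is a toy sketch, not how Hummingbird works; the `SENSES` word lists and the function name `guess_sense` are hypothetical, and real systems rely on learned language models rather than hand-written lists.

```python
import re

# Hypothetical sense inventory: each meaning of "panda" with typical context words.
SENSES = {
    "animal": {"bamboo", "zoo", "china", "bear", "cub", "habitat", "eat"},
    "antivirus": {"antivirus", "virus", "download", "security", "license", "scan", "windows"},
}


def tokenize(text):
    """Lower-case the text and return the set of its words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def guess_sense(query, senses=SENSES):
    """Pick the sense whose context words overlap most with the query.

    Returns None when there is no overlap at all or when senses tie.
    """
    words = tokenize(query)
    scores = {sense: len(words & context) for sense, context in senses.items()}
    best = max(scores.values())
    winners = [sense for sense, score in scores.items() if score == best]
    return winners[0] if best > 0 and len(winners) == 1 else None


print(guess_sense("what does a panda eat in the zoo"))  # → animal
print(guess_sense("panda free download for windows"))   # → antivirus
print(guess_sense("panda"))                             # → None
```

The bare query “panda” returns no answer, which is exactly the situation where the search engine has to fall back on other context, such as the user’s history or location.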

With the user’s intentions in mind, look at what Google already shows. If Google usually responds to a query such as “laptops up to $8000” by displaying blog articles with laptop rankings, don’t expect an online store’s “laptops” category page with a price filter to get into those search results. When it comes to context, focus on the following:

- Concentrate on topics, not on phrases – we have written about so-called topical authority before

- Contextual external linking – if a user reached your website through the phrase “diabetes”, do not suggest content about “Barack Obama” (e.g. on washingtonpost.com); suggest content that will help him or her stay on the topic of diabetes

- Structured data – they also give the search engine more information about the content (see the tomato soup example above).

Communicating vessels

Can you see how all the elements above are connected?

I think AI will be at the core of future search results, pushing everything forward. What’s more:

- Voice search requires strong semantics – Google needs to understand natural language and the context of a query for this technology to work properly

- Mobile & voice need UX – only websites well optimized for UX can be truly mobile- and voice-friendly

- Voice needs graphs – knowledge graphs will allow assistants such as Google Home to communicate with the user solely via a voice interface, which seems to be necessary.

Conclusions

I am often asked: “What does the future of SEO hold?” and “Will SEO ever die?” In my opinion, in a world advancing so rapidly, and set to advance even faster, we don’t have the luxury of being afraid. SEO will become more and more technical, which will likely consolidate the market (providers of low-quality services will be forced out). SEO may become impractical for small businesses: with AI in the picture, I can’t imagine a plumber being able to afford such a complex service. Big institutions, however, will keep using SEO services for years, since the potential ROI is currently very high and even a drop in it would not make SEO unprofitable.

Damian Sałkowski
